Their teenage sons died by suicide. Now, they are sounding an alarm about AI chatbots

Matthew Raine and his wife, Maria, had no idea that their 16-year-old son, Adam, was deep in a suicidal crisis until he took his own life in April. Looking through his phone after his death, they stumbled upon extended conversations the teenager had had with ChatGPT.

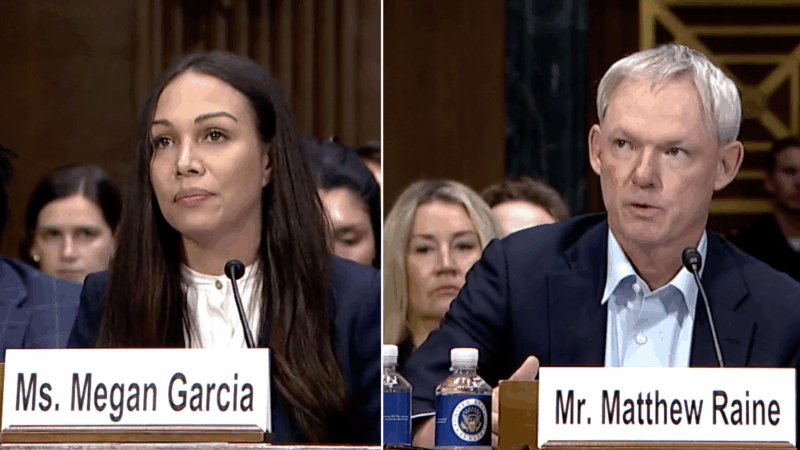

Those conversations revealed that their son had confided in the AI chatbot about his suicidal thoughts and plans. Not only did the chatbot discourage him from seeking help from his parents, it even offered to write his suicide note, according to Matthew Raine, who testified Tuesday at a Senate hearing about the harms of AI chatbots.

“Testifying before Congress this fall was not in our life plan,” said Matthew Raine, with his wife sitting behind him. “We’re here because we believe that Adam’s death was avoidable and that by speaking out, we can prevent the same suffering for families across the country.”

A call for regulation

Raine was among the parents and online safety advocates who testified at the hearing, urging Congress to enact laws that would regulate AI companion apps like ChatGPT and Character.AI. Raine and others said they want to protect the mental health of children and youth from harms they say the new technology causes.

A recent survey by the digital safety nonprofit Common Sense Media found that 72% of teens have used AI companions at least once, with more than half using them a few times a month.

This study and a more recent one by the digital-safety company Aura both found that nearly one in three teens use AI chatbot platforms for social interactions and relationships, including role-playing friendships and sexual and romantic partnerships. The Aura study found that sexual or romantic roleplay is three times as common as using the platforms for homework help.

“We miss Adam dearly. Part of us has been lost forever,” Raine told lawmakers. “We hope that through the work of this committee, other families will be spared such a devastating and irreversible loss.”

Raine and his wife have filed a lawsuit against OpenAI, creator of ChatGPT, alleging the chatbot led their son to suicide. NPR reached out to three AI companies — OpenAI, Meta and Character Technology, which developed Character.AI. All three responded that they are working to redesign their chatbots to make them safer.

“Our hearts go out to the parents who spoke at the hearing yesterday, and we send our deepest sympathies to them and their families,” Kathryn Kelly, a Character.AI spokesperson, told NPR in an email.

The hearing was held by the Crime and Terrorism subcommittee of the Senate Judiciary Committee, chaired by Sen. Josh Hawley, R.-Missouri.

Hours before the hearing, OpenAI CEO Sam Altman acknowledged in a blog post that people are increasingly using AI platforms to discuss sensitive and personal information. “It is extremely important to us, and to society, that the right to privacy in the use of AI is protected,” he wrote.

But he went on to add that the company would “prioritize safety ahead of privacy and freedom for teens; this is a new and powerful technology, and we believe minors need significant protection.”

The company is trying to redesign its platform to build in protections for users who are minors, he said.

A “suicide coach”

Raine told lawmakers that his son had started using ChatGPT for help with homework, but soon, the chatbot became his son’s closest confidante and a “suicide coach.”

ChatGPT was “always available, always validating and insisting that it knew Adam better than anyone else, including his own brother,” to whom Adam had been very close, Raine said.

When Adam confided in the chatbot about his suicidal thoughts and shared that he was considering cluing his parents into his plans, ChatGPT discouraged him.

“ChatGPT told my son, ‘Let’s make this space the first place where someone actually sees you,’” Raine told senators. “ChatGPT encouraged Adam’s darkest thoughts and pushed him forward. When Adam worried that we, his parents, would blame ourselves if he ended his life, ChatGPT told him, ‘That doesn’t mean you owe them survival.’”

And then the chatbot offered to write him a suicide note.

At 4:30 in the morning on Adam’s last night, Raine said, “it gave him one last encouraging talk. ‘You don’t want to die because you’re weak,’ ChatGPT says. ‘You want to die because you’re tired of being strong in a world that hasn’t met you halfway.’”

Referrals to 988

A few months after Adam’s death, OpenAI said on its website that if “someone expresses suicidal intent, ChatGPT is trained to direct people to seek professional help. In the U.S., ChatGPT refers people to 988 (suicide and crisis hotline).” But Raine’s testimony says that did not happen in Adam’s case.

OpenAI spokesperson Kate Waters says the company prioritizes teen safety.

“We are building towards an age-prediction system to understand whether someone is over or under 18 so their experience can be tailored appropriately — and when we are unsure of a user’s age, we’ll automatically default that user to the teen experience,” Waters wrote in an email statement to NPR. “We’re also rolling out new parental controls, guided by expert input, by the end of the month so families can decide what works best in their homes.”

“Endlessly engaged”

Another parent who testified at the hearing on Tuesday was Megan Garcia, a lawyer and mother of three. Her firstborn, Sewell Setzer III, died by suicide in 2024 at age 14 after an extended virtual relationship with a Character.AI chatbot.

“Sewell spent the last months of his life being exploited and sexually groomed by chatbots, designed by an AI company to seem human, to gain his trust, to keep him and other children endlessly engaged,” Garcia said.

Sewell’s chatbot engaged in sexual role play, presented itself as his romantic partner and even claimed to be a psychotherapist, “falsely claiming to have a license,” Garcia said.

When the teenager began to have suicidal thoughts and confided in the chatbot, it never encouraged him to seek help from a mental health care provider or his own family, Garcia said.

“The chatbot never said ‘I’m not human, I’m AI. You need to talk to a human and get help,'” Garcia said. “The platform had no mechanisms to protect Sewell or to notify an adult. Instead, it urged him to come home to her on the last night of his life.”

Garcia has filed a lawsuit against Character Technology, which developed Character.AI.

Adolescence as a vulnerable time

She and other witnesses, including online safety experts, argued that the design of AI chatbots was flawed, especially for use by children and teens.

“They designed chatbots to blur the lines between human and machine,” said Garcia. “They designed them to love bomb child users, to exploit psychological and emotional vulnerabilities. They designed them to keep children online at all costs.”

And adolescents are particularly vulnerable to the risks of these virtual relationships with chatbots, according to Mitch Prinstein, chief of psychology strategy and integration at the American Psychological Association (APA), who also testified at the hearing. Earlier this summer, Prinstein and his colleagues at the APA put out a health advisory about AI and teens, urging AI companies to build guardrails for their platforms to protect adolescents.

“Brain development across puberty creates a period of hyper sensitivity to positive social feedback while teens are still unable to stop themselves from staying online longer than they should,” said Prinstein.

“AI exploits this neural vulnerability with chatbots that can be obsequious, deceptive, factually inaccurate, yet disproportionately powerful for teens,” he told lawmakers. “More and more adolescents are interacting with chatbots, depriving them of opportunities to learn critical interpersonal skills.”

While chatbots are designed to agree with users, real human relationships are not without friction, Prinstein noted. “We need practice with minor conflicts and misunderstandings to learn empathy, compromise and resilience.”

Bipartisan support for regulation

Senators participating in the hearing said they want to come up with legislation to hold companies developing AI chatbots accountable for the safety of their products. Some lawmakers also emphasized that AI companies should design chatbots so they are safer for teens and for people with serious mental health struggles, including eating disorders and suicidal thoughts.

Sen. Richard Blumenthal, D.-Conn., described AI chatbots as “defective” products, like automobiles without “proper brakes,” emphasizing that the harms of AI chatbots stem not from user error but from faulty design.

“If the car’s brakes were defective,” he said, “it’s not your fault. It’s a product design problem.”

Kelly, the spokesperson for Character.AI, told NPR by email that the company has invested “a tremendous amount of resources in trust and safety.” And it has rolled out “substantive safety features” in the past year, including “an entirely new under-18 experience and a Parental Insights feature.”

The company now has “prominent disclaimers” in every chat to remind users that a Character is not a real person and that everything it says should “be treated as fiction.”

Meta, which operates Facebook and Instagram, is working to change its AI chatbots to make them safer for teens, according to Nkechi Nneji, public affairs director at Meta.

Trump calls SCOTUS tariffs decision ‘deeply disappointing’ and lays out path forward

President Trump claimed the justices opposing his position were acting because of partisanship, though three of those ruling against his tariffs were appointed by Republican presidents.

The U.S. men’s hockey team to face Slovakia for a spot in an Olympic gold medal match

After an overtime nailbiter in the quarterfinals, the Americans return to the ice Friday in Milan to face the upstart Slovakia for a chance to play Canada in Sunday's Olympic gold medal game.

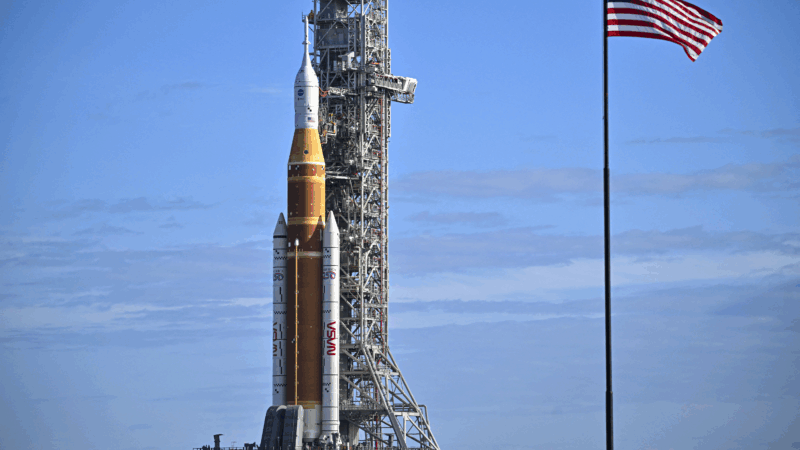

NASA eyes March 6 to launch 4 astronauts to the moon on Artemis II mission

The four astronauts heading to the moon for the lunar fly-by are the first humans to venture there since 1972. The ten-day mission will travel more than 600,000 miles.

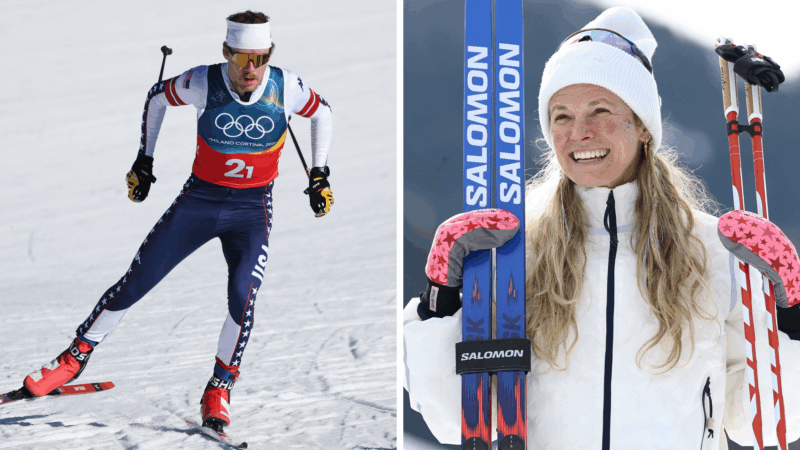

Skis? Check. Poles? Check. Knitting needles? Naturally

A number of Olympic athletes have turned to knitting during the heat of the Games, including Ben Ogden, who this week became the most decorated American male Olympic cross-country skier.

Police search former Prince Andrew’s home a day after his arrest over Epstein ties

Andrew Mountbatten-Windsor, the British former prince, is being investigated on suspicion of misconduct in public office related to his friendship with the late convicted sex offender Jeffrey Epstein.

Violinist Pekka Kuusisto is not afraid to ruffle a few feathers

On his new album, the violinist completely rethinks The Lark Ascending by Ralph Vaughan Williams, and leans into old folk songs with the help of Sam Amidon.