As AI advances, doomers warn the superintelligence apocalypse is nigh

What happens when we make an artificial intelligence that’s smarter than us? Some AI researchers have long warned that moment will mean humanity’s doom.

Now that AI is rapidly advancing, some “AI Doomers” say it’s time to hit the brakes. They say the machine learning revolution that produced everyday AI models such as ChatGPT has also made it harder to figure out how to “align” artificial intelligence with our interests, namely, how to keep an AI that outsmarts humans on our side. Some AI safety researchers say there’s a chance that such a superhuman intelligence would act quickly to wipe us out.

NPR’s Martin Kaste reports on the tensions in Silicon Valley over AI safety.

For a more detailed discussion on the arguments for — and against — AI doom, please listen to this special episode of NPR Explains:

And for the truly curious, a reading list:

The abbreviated version of the “Everyone Dies” argument, in The Atlantic

The “useful idiots” rebuttal, also in The Atlantic

The potential timeline of an AI takeover

Research into “AI Faking” and deception

Maybe AI isn’t speeding up the development of smarter AI, at least not yet: research from METR

Analysis, and skepticism, from experts about the near-term likelihood of human- or superhuman-level artificial intelligence

Transcript:

ARI SHAPIRO, HOST:

Way back in the year 2011, this program aired a story about the risk of something called artificial intelligence. We profiled an organization trying to prepare for the possibility of a superhuman AI – a machine so smart it might decide to get rid of slower-witted humans. Back then, that was science fiction. And now, well, NPR’s Martin Kaste went back to see how those computer researchers rate our chances in 2025.

(SOUNDBITE OF ARCHIVED RECORDING)

UNIDENTIFIED ANNOUNCER: This is the main event of the evening.

(CHEERING)

MARTIN KASTE, BYLINE: Welcome to a demo night in downtown San Francisco. Competitive events like this are a big part of the AI boom in this town right now – a chance for new developers to show off their new AI apps and maybe attract investors.

JONATHAN LIU: Yeah, my name is Jonathan Liu, and I’m the founder of Cupidly, which is an AI agent that swipes for you on Hinge.

KASTE: You describe your ideal mate to the Cupidly AI, and it goes into the dating app to find a match for you. Or it did. Liu has now shut it down because users were getting banned by Hinge. But Liu is typical of this crowd in his bullishness about AI and the prospect of AI eventually becoming as smart as, or even smarter than, humans.

LIU: I think, once we do get super intelligence, hopefully, we’ll live in a utopia where nobody has to actually work ever again.

KASTE: But almost in the same breath, Liu also says that he sees a possibility that superhuman AI could end up killing off all of humanity. And he’s not kidding. Just ask him for his p(doom). It’s a term he’ll recognize; it’s half-joking AI slang for the estimated probability of AI doom.

LIU: What’s my p(doom)? I would say around 50%.

KASTE: And yet, you’re smiling about it?

LIU: I’m smiling about it because there’s nothing we can do about it.

KASTE: This strange mix of optimism and fatalism has long been a part of the AI world. Even the CEOs of OpenAI and Anthropic – two of the most important AI companies – signed a public statement a couple of years ago that acknowledged the, quote, “risk of extinction from AI.” And the reason for this is a pretty straightforward logical problem. If they were to build something that’s smarter than us, how would they keep it on our side?

(SOUNDBITE OF MUSIC)

KASTE: That problem is called alignment, as in how to align AI with human values. And here in Berkeley, near the UC campus, there’s now a cluster of people working on that problem and related AI questions. Nate Soares is president of MIRI, the Machine Intelligence Research Institute. That’s the newer name for an AI alignment organization that NPR first visited back in 2011.

NATE SOARES: I spent quite a number of years – maybe about 10 years – trying to figure out how to make AI go well. And for a bunch of reasons, that’s been going poorly.

KASTE: Soares has now given up on trying to solve that alignment riddle. He says the machine-learning revolution of the last few years, which created ChatGPT and the like, is now moving things too fast toward superhuman AI. And he takes little comfort from the fact that there are now many more researchers here who are focused on AI safety.

SOARES: Yeah. I mean, for one thing, I would not call it AI safety. I would say, you know, safety is for seat belts, and if you’re in a car, sort of careening towards a cliff edge, you wouldn’t say, hey, let’s talk about car safety here. You would say, let’s stop going over the cliff edge.

KASTE: That cliff, as Soares puts it, is a scenario in which AI gets more closely involved in improving AI, accelerating a feedback loop of self-improving artificial intelligence that leaves humans behind as uncomprehending spectators and then perhaps as obstacles to be swept aside. That’s why Soares and another MIRI colleague have given up on alignment and are instead taking the last-ditch route of publishing a book that begs humanity to slam on the brakes.

SOARES: The title of the book is, “If Anyone Builds It, Everyone Dies.”

KASTE: Let that sink in and look around you. Does this all go away, really, in a few years?

SOARES: I mean, I can’t tell you when, but it could be a couple of years, could be a dozen years. But yeah, this around us is what’s at stake.

KASTE: It’s an extreme vision. Some critics say it’s overblown, that current AI training methods can’t even achieve human-level intelligence, let alone superintelligence. Others say the doomers are unwittingly hyping AI. One writer in The Atlantic ridiculed Soares and his coauthor as useful idiots whose doomsaying makes AI look more powerful than it really is. And it’s also just a lot to ask of a booming new tech sector that it restrain itself, perhaps with government intervention.

MARK BEALL: In D.C. right now, the conversation on AI is still very, very early.

KASTE: Mark Beall is president of government affairs for the AI Policy Network, a lobbying organization.

BEALL: There does seem to be at least an appetite to start measuring the risks and start to examine more carefully, you know, what threshold, what alarm bell might need to go off that would change that assumption about whether or not we ought to consider something as drastic as a pause.

KASTE: Government restrictions seem unlikely to Jim Miller. He’s an economist at Smith College who’s focused on the game theory aspect of AI development. He sees this as quickly turning into a race.

JIM MILLER: If I am Elon Musk, I can say, you know what? I don’t know if racing a super intelligence is going to kill everyone or not. But if it is going to kill everyone, and I don’t do it, someone else will. And if I end up killing everyone, I’ve maybe taken off a couple of weeks ’cause OpenAI would have done it a week later. And then Trump and Vance can say, yeah, maybe this will kill everyone, but if we don’t do it, China will.

KASTE: And for Miller, this isn’t just an academic question. In his own life, he’s decided to put off risky surgery to correct a potentially fatal condition in his brain, because he’s an AI doomer convinced that a superhuman AI is likely to end human civilization in the next few years. Or, if he’s very lucky, that superhuman AI will spare us and then offer him a safer treatment.

(SOUNDBITE OF BELL GONGING)

KASTE: On the campus of UC Berkeley, the generation with the most at stake is setting up information tables for its student clubs. At the table for the club devoted to AI safety, Adi Mehta says he has heard the doomer argument, but he’s focused on AI’s more immediate risks.

ADI MEHTA: One thing that is more apparent for college students is that I can’t remember the last time I did an assignment without using AI. It’s automating a lot of our thinking away, which personally, that’s, like, a pretty big fear.

KASTE: Another club member, Natalia Trounce, says she’s also just not that focused on doom.

NATALIA TROUNCE: I think many things are possible, but it seems like it’s not the most likely scenario at this stage.

KASTE: If I were to ask the average Berkeley student, is this, like, my life’s going to be over in three years, so just have fun now? Or is it just…

TROUNCE: I feel like if it was, like, a three years, it’s going to be over, like, it would have happened already.

KASTE: Walking back to the offices of MIRI, Nate Soares admits that with AI already such a normal part of life here, it’s hard to convince people that we’re about to go over that cliff. He says one hope is that maybe the rise of superhuman AI will be just gradual enough to be noticed and give people some time to react.

SOARES: Maybe it doesn’t take a ton. Maybe the AI is doing a little better, getting a little smarter, getting a little bit more competent, getting a little bit more reliable. Maybe that’ll make people a lot more spooked. I don’t know.

KASTE: And maybe, just maybe, he and his fellow AI doomers are wrong about the danger. He says he would love to be wrong, but he doubts he is. Martin Kaste, NPR News, Berkeley, California.

The candy heir vs. chocolate skimpflation

The grandson of the Reese's Peanut Butter Cups creator has launched a campaign against The Hershey Company, which owns the Reese's brand. He wants them to stop skimping on ingredients.

Scientists make a pocket-sized AI brain with help from monkey neurons

A new study suggests AI systems could be a lot more efficient. Researchers were able to shrink an AI vision model to 1/1000th of its original size.

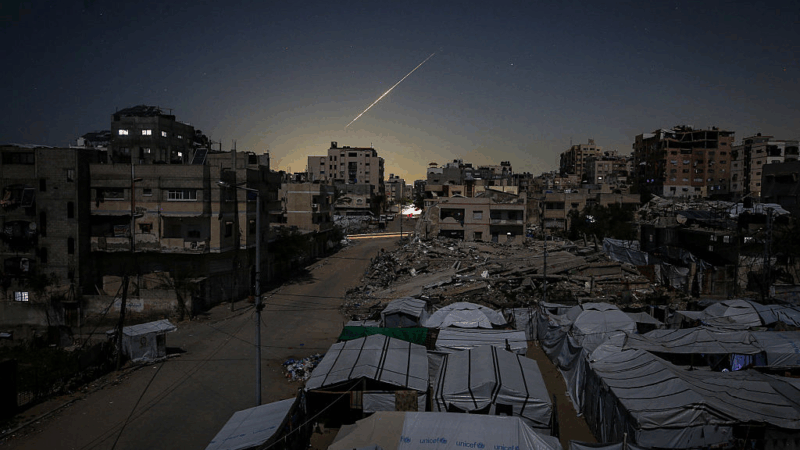

U.S. evacuates diplomats, shuts down some embassies as war enters fourth day

The United States evacuated diplomats across the Middle East and shut down some embassies as war with Iran intensified Tuesday while President Trump signaled the conflict could turn into extended war.

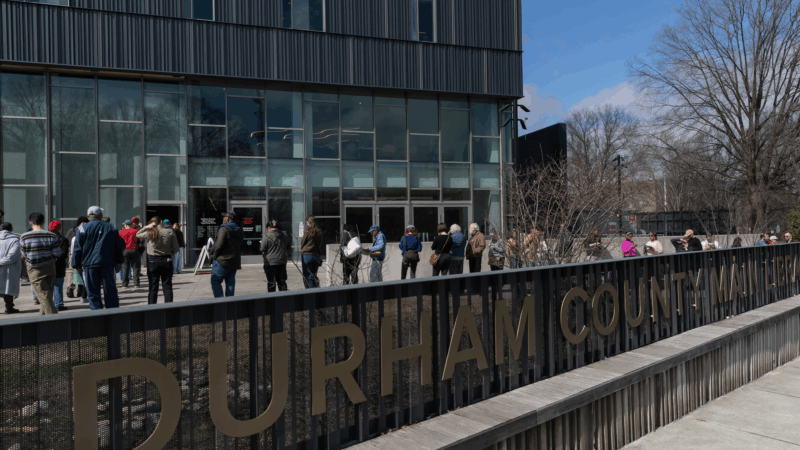

North Carolina and Texas have primary elections Tuesday. Here’s what you need to know

The midterm elections are officially underway and contests in Texas and North Carolina will be the first major opportunity for parties to hear from voters about what's important to them in 2026.

Kristi Noem set to face senators over DHS shutdown, immigration enforcement

The focus of the hearing is likely to be on how Kristi Noem is pursuing President Trump's mass deportation efforts in his second term, after two U.S. citizens were killed by immigration officers.

College students, professors are making their own AI rules. They don’t always agree

More than three years after ChatGPT debuted, AI has become a part of everyday life — and professors and students are still figuring out how or if they should use it.